Gyro to LiDAR: Building Smarter AR Games

In mobile game development, sensors such as gyroscopes, accelerometers, and LiDAR have greatly expanded what games can do. Using game engines such as Unity and Unreal Engine, developers can build immersive experiences that respond to real-world motion and environments. This article examines the methods and advantages of using these technologies for iOS and Android game development, particularly with ARKit and ARCore.

Mobile gaming has evolved from simple tap-and-swipe mechanics to complex interactions that mimic console-level depth. With the widespread availability of advanced sensors in smartphones, developers can now create games that respond dynamically to the player’s environment and actions.

Historical Background of Mobile Gaming

Initially, mobile games were limited by the hardware capabilities of early devices, focusing mainly on basic graphics and simple mechanics. As technology advanced, so did the potential for more complex games, with the introduction of touchscreens bringing a new dimension of interaction. The emergence of smartphones with integrated sensors marked a significant leap, enabling developers to incorporate real-world elements into gameplay.

Impact of Sensors on Game Design

The introduction of sensors has transformed how developers approach game design. Sensors such as gyroscopes and accelerometers allow for more intuitive and engaging gameplay mechanics, such as motion control and environmental interaction. This evolution has expanded the possibilities for storytelling and player immersion, allowing games to transition from static experiences to dynamic, responsive environments.

Case Studies in Sensor Utilization

Games like “Pokémon GO” and “Ingress” have set precedents in utilizing sensor technology to create engaging experiences. “Pokémon GO” leverages GPS and camera data to overlay digital creatures in the real world, encouraging exploration. Similarly, “Ingress” uses location data to turn real-world locations into virtual game elements, creating an interactive map that players can engage with.

The Role of Gyroscopes and Accelerometers

Gyroscopes and accelerometers are pivotal in enhancing user interaction. These sensors detect rotation and acceleration respectively, enabling developers to implement motion-based controls. “Pokémon GO”, for example, relies on these motion sensors in its AR mode so that a creature stays fixed in the scene as the player turns the phone. The physical exploration that both “Pokémon GO” and “Ingress” encourage, however, is driven mainly by GPS location.

Understanding Gyroscopes and Their Applications

A gyroscope measures angular velocity: how quickly the device is rotating around each of its three axes. Integrating those rates over time yields the device's orientation, that is, how it is tilted or rotated, although platforms usually fuse gyroscope data with other sensors to keep that estimate from drifting. Orientation data is central to experiences such as steering a car by tilting the phone or looking around a virtual world. With it, developers can design games that require players to physically move their devices, enhancing engagement through realistic control schemes.
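Because a gyroscope reports a rotation rate rather than an angle, turning its output into a steering angle means summing rate × time across samples. The Python sketch below shows that step in engine-neutral form. It assumes rotation about a single axis and evenly spaced samples in radians per second; the function names and the 45-degree full-lock angle are illustrative choices, not part of any SDK. A shipping game would normally read the platform's fused orientation instead, since this naive integration drifts over time.

```python
import math


def integrate_angle(angle_rad: float, rate_rad_s: float, dt_s: float) -> float:
    """Advance an angle by one gyroscope sample using simple Euler integration."""
    angle = angle_rad + rate_rad_s * dt_s
    # Wrap into [-pi, pi) so the angle stays bounded as the player keeps turning.
    return (angle + math.pi) % (2 * math.pi) - math.pi


def steering_from_angle(angle_rad: float, full_lock_rad: float = math.radians(45)) -> float:
    """Map a rotation angle to a steering value in [-1, 1], clamped at full lock."""
    return max(-1.0, min(1.0, angle_rad / full_lock_rad))


# Example: 100 samples at 10 ms intervals while turning at 0.5 rad/s.
angle = 0.0
for _ in range(100):
    angle = integrate_angle(angle, 0.5, 0.01)
print(round(steering_from_angle(angle), 2))
```

Turning at 0.5 rad/s for one second accumulates 0.5 rad, which maps to a steering value of roughly 0.64.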

Accelerometers in Motion-Based Gaming

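An accelerometer measures linear acceleration along each axis, including gravity, so a device lying still on a table still reports about 9.8 m/s² (1 g). Sharp spikes in the reading reveal shakes and sudden movements, while the direction of gravity reveals how the device is tilted. This sensor is often used in fitness and rhythm-based games, where player actions directly influence gameplay. Because it registers fairly subtle movements, the accelerometer adds depth to the gaming experience, although its raw output is noisy and is usually smoothed before it drives precise control.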
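Shake detection, for instance, can be sketched by comparing the magnitude of each accelerometer sample with gravity. This Python sketch assumes samples in m/s² (Android reports this unit; iOS Core Motion reports multiples of g, so those values would need scaling by about 9.81 first). The `ShakeDetector` class, its 12 m/s² threshold, and its half-second cooldown are hypothetical values to tune per game.

```python
import math
from dataclasses import dataclass, field

GRAVITY_M_S2 = 9.81  # standard gravity, rounded


@dataclass
class ShakeDetector:
    threshold_m_s2: float = 12.0  # hypothetical: acceleration beyond gravity that counts as a shake
    cooldown_s: float = 0.5       # hypothetical: minimum time between two reported shakes
    _last_shake_s: float = field(default=-math.inf, init=False, repr=False)

    def update(self, ax: float, ay: float, az: float, t_s: float) -> bool:
        """Return True if this sample (m/s^2, gravity included) registers a new shake."""
        magnitude = math.sqrt(ax * ax + ay * ay + az * az)
        # A device at rest reads about 1 g, so measure how far the reading strays from gravity.
        excess = abs(magnitude - GRAVITY_M_S2)
        if excess > self.threshold_m_s2 and t_s - self._last_shake_s >= self.cooldown_s:
            self._last_shake_s = t_s
            return True
        return False
```

The cooldown keeps one vigorous shake, which produces several spiky samples in a row, from firing the action repeatedly.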

Combining Sensors for Enhanced Gameplay

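Used together, gyroscopes and accelerometers compensate for each other's weaknesses. The gyroscope is smooth and responsive over short periods but drifts as small errors accumulate, while the accelerometer provides a drift-free tilt reference (the direction of gravity) but is noisy and is disturbed whenever the device accelerates. Fusing the two, which iOS (Core Motion's device-motion attitude) and Android (the rotation vector sensor) both do on the developer's behalf, yields an orientation estimate that is both stable and responsive. That stable orientation underpins mechanics such as augmented reality (AR) experiences, where players interact with their environment in natural and intuitive ways.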
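One common way to fuse the two by hand is a complementary filter: the gyroscope's integrated angle is trusted over short periods, and the accelerometer's gravity-based tilt slowly pulls the estimate back to cancel drift. The Python sketch below does this for a single axis (pitch). It is a simplification, not the algorithm used by the platform fusion services; the axis convention in `accel_pitch` and the blend factor `alpha = 0.98` are assumptions, since device axes differ between platforms and engines.

```python
import math


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch (radians) implied by the gravity vector; only trustworthy while the device is not accelerating."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(pitch: float, gyro_rate: float, accel_angle: float,
                         dt: float, alpha: float = 0.98) -> float:
    """Blend the integrated gyro angle (smooth but drifting) with the accelerometer angle (noisy but drift-free)."""
    return alpha * (pitch + gyro_rate * dt) + (1.0 - alpha) * accel_angle


# Simulate a device held still at 0.3 rad of pitch whose gyro has a 0.01 rad/s bias.
true_pitch = 0.3
ax, ay, az = -9.81 * math.sin(true_pitch), 0.0, 9.81 * math.cos(true_pitch)
fused = drifting = 0.0
for _ in range(2000):  # 20 s at 100 Hz
    fused = complementary_filter(fused, 0.01, accel_pitch(ax, ay, az), 0.01)
    drifting += 0.01 * 0.01  # gyro-only integration simply accumulates the bias
```

In this simulation, gyro-only integration drifts by 0.2 rad over 20 seconds, while the filtered estimate settles within about 0.005 rad of the true tilt.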

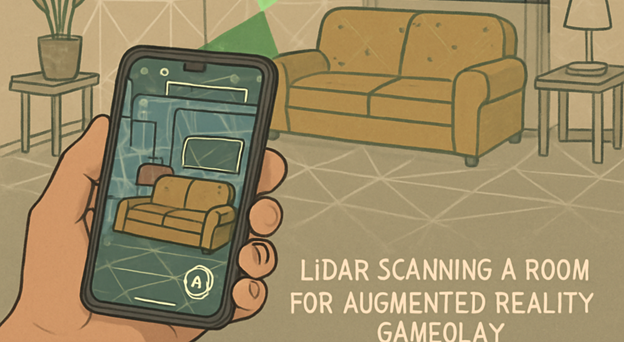

Leveraging LiDAR for AR Games

LiDAR scanners, found mainly on recent Pro-model iPhones and iPad Pro models, provide direct depth measurements and detailed environmental mapping. This allows developers to create augmented reality (AR) games with a higher degree of precision and interactivity.

The Mechanics of LiDAR Technology

LiDAR, or Light Detection and Ranging, uses laser pulses to measure distances and create detailed 3D maps of the surrounding environment. This technology is invaluable for AR games, as it enables accurate placement of virtual objects within real-world settings. By understanding the spatial layout, developers can craft experiences that feel grounded and believable.
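The distance measurement itself is time-of-flight arithmetic: the pulse travels out and back, so the distance is half the round-trip time multiplied by the speed of light. Once a pixel has a depth value, a pinhole camera model turns it into a 3D point. The Python sketch below shows both steps. The intrinsics `fx`, `fy`, `cx`, `cy` would come from the AR framework (ARKit exposes them through `ARCamera.intrinsics` and LiDAR depth through `ARFrame.sceneDepth`); the function names and the axis convention (x right, y down, z forward) are assumptions, and some engines use different world axes.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a surface from a light pulse's round-trip time (out and back, hence / 2)."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def unproject(u: float, v: float, depth_m: float,
              fx: float, fy: float, cx: float, cy: float) -> tuple[float, float, float]:
    """Back-project pixel (u, v) with metric depth into a camera-space point (pinhole model)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)
```

A 20-nanosecond round trip, for example, corresponds to about 3 m (299,792,458 × 20e-9 / 2 ≈ 2.998 m).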

Enhancing AR with LiDAR’s Precision

The precision offered by LiDAR allows for more immersive and interactive AR experiences. Developers can create realistic occlusion effects, where virtual objects are accurately obscured by real-world elements. This level of detail enhances the player’s sense of presence and immersion, making virtual interactions more convincing and engaging.
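At its core, occlusion is a per-pixel depth comparison: a virtual pixel is drawn only where it is closer to the camera than the real surface measured at that pixel. Engines perform this test on the GPU in a shader; the Python sketch below spells out the same comparison on small nested lists for clarity. The function name and the 2 cm tolerance `bias_m`, which keeps virtual content resting on a real surface from flickering because of depth noise, are illustrative assumptions.

```python
def occlusion_mask(real_depth: list[list[float]],
                   virtual_depth: list[list[float]],
                   bias_m: float = 0.02) -> list[list[bool]]:
    """True where a virtual pixel should be drawn, False where real geometry hides it.

    Depths are distances from the camera in meters; use float("inf") in
    virtual_depth for pixels that contain no virtual content.
    """
    return [
        # Draw the virtual pixel only if it is nearer than the real surface (within the tolerance).
        [virt < real + bias_m for real, virt in zip(real_row, virt_row)]
        for real_row, virt_row in zip(real_depth, virtual_depth)
    ]
```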

Challenges and Opportunities in LiDAR Integration

While LiDAR presents exciting possibilities, it also adds development complexity and raises device-compatibility questions, because only a subset of phones and tablets include a LiDAR scanner. Developers must detect the capabilities of each device at runtime and provide fallbacks for hardware without one. However, the opportunities for creating groundbreaking AR experiences are vast, with LiDAR paving the way for new genres and gameplay mechanics.
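A runtime fallback can be as simple as choosing the best occlusion mode a device reports. In the Python sketch below, `DeviceCaps`, `OcclusionMode`, and `choose_occlusion` are hypothetical names. In a real project the flags would be filled from queries such as ARKit's `ARWorldTrackingConfiguration.supportsSceneReconstruction(.mesh)` or ARCore's `Session.isDepthModeSupported(Config.DepthMode.AUTOMATIC)`; the latter can succeed on devices without a depth sensor, because ARCore can estimate depth from camera motion.

```python
from dataclasses import dataclass
from enum import Enum


class OcclusionMode(Enum):
    SCENE_MESH = "scene_mesh"            # mesh reconstructed from a depth (LiDAR) sensor
    ESTIMATED_DEPTH = "estimated_depth"  # depth inferred from camera motion, no depth sensor
    NONE = "none"                        # virtual content is always drawn on top


@dataclass(frozen=True)
class DeviceCaps:
    """Hypothetical capability flags, filled from the AR framework's runtime queries."""
    supports_scene_mesh: bool
    supports_depth_estimation: bool


def choose_occlusion(caps: DeviceCaps) -> OcclusionMode:
    """Pick the best occlusion mode the device supports, falling back step by step."""
    if caps.supports_scene_mesh:
        return OcclusionMode.SCENE_MESH
    if caps.supports_depth_estimation:
        return OcclusionMode.ESTIMATED_DEPTH
    return OcclusionMode.NONE
```

Keeping the decision in one place makes it easy to test every tier, including the "no occlusion" path that older devices will take.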

Implementing ARKit and ARCore

Apple’s ARKit and Google’s ARCore are frameworks that facilitate the creation of AR experiences. These platforms support robust tracking and environmental understanding, which are crucial for sensor-integrated gaming.
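Placing a virtual object on a detected surface comes down to casting a ray from the camera through the tapped screen point and intersecting it with a detected plane. Both frameworks provide their own raycast or hit-test APIs for this, so the Python sketch below only illustrates the underlying geometry. `ray_plane_hit` and the `Vec3` alias are illustrative, and the example assumes a y-up world with the floor plane at y = 0.

```python
from typing import Optional

Vec3 = tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_plane_hit(origin: Vec3, direction: Vec3, plane_point: Vec3,
                  plane_normal: Vec3, eps: float = 1e-6) -> Optional[Vec3]:
    """Return where a ray meets a plane, or None if it is parallel or the plane is behind the ray."""
    denom = dot(direction, plane_normal)
    if abs(denom) < eps:
        return None  # ray runs parallel to the plane
    diff = (plane_point[0] - origin[0], plane_point[1] - origin[1], plane_point[2] - origin[2])
    t = dot(diff, plane_normal) / denom
    if t < 0:
        return None  # intersection lies behind the camera
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])
```

For a camera 1.5 m above the floor looking down at 45 degrees along (0, -1, -1), the hit lands 1.5 m in front of the camera at (0, 0, -1.5).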

ARKit: Expanding iOS AR Capabilities

ARKit gives iOS developers tools to build seamless AR experiences from device sensor data, including LiDAR where available. Features like people occlusion, motion capture, and scene geometry allow for realistic interactions. The framework’s ease of use and integration with Apple’s ecosystem make it a powerful tool for developers looking to push the boundaries of AR gaming and iOS game development. Combined with sound game development practices, ARKit lets creators deliver immersive, interactive experiences tailored specifically for Apple devices.

ARCore: Google’s Approach to AR on Android

ARCore offers similar capabilities on Android devices, using camera and sensor data to blend virtual content with the real world. Its features, such as light estimation and environmental understanding, enable consistent and realistic AR interactions. ARCore’s flexibility and cross-platform support make it an attractive choice for developers aiming to reach a broad audience.
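Light estimation is typically applied by scaling a virtual material's color by the estimated scene brightness, so the object is neither glowing in a dim room nor dull in a bright one. The Python sketch below assumes an intensity value plus a "neutral" reference: ARKit documents an `ambientIntensity` of 1000 lumens as neutral lighting, while ARCore reports a different quantity, so its reference would have to be chosen separately. The `lit_color` function and its 2× gain cap are illustrative assumptions.

```python
def lit_color(base_rgb: tuple[float, float, float],
              intensity: float,
              neutral_intensity: float = 1000.0,
              max_gain: float = 2.0) -> tuple[float, ...]:
    """Scale a linear RGB color (components in [0, 1]) by the estimated ambient light.

    The default neutral value follows ARKit's ambientIntensity convention
    (1000 lumens = neutral); other estimators need their own reference.
    """
    # Clamp the gain so extreme estimates cannot blow out or invert the color.
    gain = min(max(intensity / neutral_intensity, 0.0), max_gain)
    return tuple(min(1.0, c * gain) for c in base_rgb)
```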

Comparing ARKit and ARCore for Game Development

Choosing between ARKit and ARCore often depends on the target platform and desired features. While both offer robust AR capabilities, ARKit’s integration with Apple’s ecosystem provides a seamless experience for iOS users. Conversely, ARCore’s wide device compatibility and adaptability make it ideal for reaching Android’s diverse market. Developers should weigh the strengths of each framework when planning their AR projects.

Unity vs. Unreal: Choosing the Right Engine

The choice between Unity and Unreal Engine often hinges on the specific needs of a project. Both engines offer distinct advantages for sensor-integrated mobile game development.

Unity: Versatility and Ease of Use

Unity is renowned for its flexibility and extensive asset store, making it an excellent choice for developers looking to create mobile games quickly. Its user-friendly interface and comprehensive documentation make it accessible to developers at all levels.

- Pros: Extensive community support, cross-platform capabilities, and an intuitive interface.

- Cons: May require additional plugins for advanced graphics or features.

Unreal Engine: Graphics and Performance

Unreal Engine stands out for its powerful graphics capabilities and high-performance rendering. It is ideal for developers aiming to push the boundaries of visual fidelity and realism in their games.

- Pros: Superior graphics capabilities, built-in tools for VR and AR development.

- Cons: Steeper learning curve and higher system requirements.

Factors to Consider When Choosing an Engine

When selecting a game engine, developers must consider factors such as project scope, team expertise, and budget. Unity’s simplicity and robust community support make it ideal for smaller teams and projects. In contrast, Unreal Engine’s advanced graphics capabilities are better suited for projects with higher visual demands and more substantial resources. Understanding these factors can guide developers in making an informed decision.

Building Sensor-Integrated Games: Best Practices

- Understand Sensor Limitations: Different devices have varying sensor capabilities. Developers should design games with these limitations in mind to ensure a consistent experience across platforms.

- Optimize for Performance: Sensor data processing can be resource-intensive. Efficient code and optimization techniques are essential to maintain smooth gameplay.

- Focus on User Experience: While sensor integration can enhance gameplay, it should not compromise usability. Controls must be intuitive, and the game should guide users in leveraging sensor-based interactions effectively.

Designing for Device Variability

Developers must account for the diverse range of devices and their sensor capabilities. Designing adaptable gameplay mechanics ensures that games perform well across different hardware configurations. Testing on various devices can help identify potential issues and optimize the experience for the widest audience.
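Sensor sampling rates vary between devices, so a smoothing filter tuned as "blend 10% of each new sample" will feel sluggish on one phone and twitchy on another. Deriving the blend factor from the elapsed time, alpha = 1 - exp(-dt / tau), keeps the response consistent regardless of rate. The Python class below is a minimal sketch of that idea; the class name and the 0.1 s time constant in the example are assumptions.

```python
import math
from typing import Optional


class LowPassFilter:
    """Exponential smoothing whose response depends on elapsed time, not on sample count."""

    def __init__(self, time_constant_s: float) -> None:
        self.tau = time_constant_s
        self.value: Optional[float] = None

    def update(self, sample: float, dt_s: float) -> float:
        if self.value is None:
            self.value = sample  # seed with the first reading
        else:
            # Larger gaps between samples let the output move further toward the new sample.
            alpha = 1.0 - math.exp(-dt_s / self.tau)
            self.value += alpha * (sample - self.value)
        return self.value


# Example: the same step input seen at 50 Hz and at 200 Hz.
slow, fast = LowPassFilter(0.1), LowPassFilter(0.1)
slow.update(0.0, 0.0)
fast.update(0.0, 0.0)
for _ in range(5):
    after_50hz = slow.update(1.0, 0.02)
for _ in range(20):
    after_200hz = fast.update(1.0, 0.005)
```

After 0.1 s of the step input, both filters reach about 63% of the new value, whether they saw 5 samples or 20.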

Strategies for Performance Optimization

Efficient processing of sensor data is crucial for maintaining responsive gameplay. Developers should employ techniques such as data caching and multithreading to minimize lag and ensure smooth interactions. Continuous performance testing throughout development helps identify bottlenecks and refine the game’s efficiency.
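One simple form of such caching is a "latest value" slot: a sensor thread overwrites it as readings arrive, and the game loop reads the newest reading once per frame instead of working through a backlog. The Python sketch below shows the pattern with a lock; in Unity or Unreal it would be written in C# or C++, and the `LatestSample` class, its sequence counter, and the `fake_sensor` stand-in are hypothetical.

```python
import threading
from typing import Generic, Optional, TypeVar

T = TypeVar("T")


class LatestSample(Generic[T]):
    """Holds only the newest sensor reading; unread older readings are overwritten."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._sample: Optional[T] = None
        self._seq = 0  # increments on every write so readers can tell whether data is new

    def write(self, sample: T) -> None:
        with self._lock:
            self._sample = sample
            self._seq += 1

    def read(self) -> tuple[Optional[T], int]:
        with self._lock:
            return self._sample, self._seq


def fake_sensor(slot: LatestSample[float], count: int) -> None:
    """Stand-in for a platform sensor callback thread (hypothetical)."""
    for i in range(count):
        slot.write(float(i))


cache: LatestSample[float] = LatestSample()
producer = threading.Thread(target=fake_sensor, args=(cache, 1000))
producer.start()
producer.join()
sample, seq = cache.read()
```

The sequence number lets the game loop skip processing when `read()` returns the same count it saw on the previous frame.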

Enhancing User Experience with Intuitive Controls

Intuitive controls are vital for user engagement, particularly when integrating complex sensor-based interactions. Developers should focus on creating seamless and natural control schemes that align with real-world actions. User feedback and iterative testing can refine these controls, ensuring they meet player expectations and enhance the overall experience.
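Three techniques that commonly make tilt controls feel natural are recentering (treating the player's current grip as neutral), a small deadzone around that neutral pose, and a response curve that gives finer control near the center. The Python sketch below combines them; `TiltControl` and its default angles and exponent are hypothetical starting points that playtesting would refine.

```python
import math


class TiltControl:
    """Turns a raw tilt angle into a stick-like value in [-1, 1]."""

    def __init__(self, max_angle_rad: float = math.radians(30),
                 deadzone_rad: float = math.radians(3),
                 exponent: float = 1.5) -> None:
        self.max_angle = max_angle_rad
        self.deadzone = deadzone_rad
        self.exponent = exponent  # values above 1 give finer control near the center
        self.neutral = 0.0

    def calibrate(self, current_angle_rad: float) -> None:
        """Treat the way the player is holding the device right now as centered."""
        self.neutral = current_angle_rad

    def value(self, angle_rad: float) -> float:
        offset = angle_rad - self.neutral
        magnitude = abs(offset)
        if magnitude <= self.deadzone:
            return 0.0
        # Rescale so the output starts at 0 just outside the deadzone and reaches 1 at max_angle.
        t = min(1.0, (magnitude - self.deadzone) / (self.max_angle - self.deadzone))
        return math.copysign(t ** self.exponent, offset)
```

Calling `calibrate` when a level starts, or from a "recenter" button, lets players hold the device at whatever angle is comfortable.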

The Future of Sensor-Integrated Gaming

As mobile technology continues to advance, the potential for sensor-integrated gaming will only expand. Developers who master these tools and techniques will be poised to create games that are not only entertaining but also innovative and engaging.

Emerging Trends in Sensor Technology

As sensor technology evolves, new opportunities for game development will emerge. Innovations in fields such as wearable technology and biometric sensors could lead to even more immersive and personalized gaming experiences. Developers should stay informed about these trends to leverage new capabilities as they become available.

The Growing Importance of AR in Mobile Gaming

Augmented reality is becoming an increasingly integral part of the mobile gaming landscape. As more devices incorporate advanced sensors like LiDAR, the potential for AR games to offer rich, immersive experiences will grow. Developers who invest in AR technologies now will be well-positioned to take advantage of this expanding market.

Preparing for the Future: Skills and Tools

To succeed in the evolving world of sensor-integrated gaming, developers must continually update their skills and knowledge. Familiarity with the latest game engines, frameworks, and sensor technologies is essential. By staying adaptable and embracing new tools, developers can create groundbreaking games that redefine the mobile gaming experience.

In conclusion, the integration of sensors such as gyroscopes, accelerometers, and LiDAR into mobile games, with the support of Unity and Unreal Engine, opens new avenues for creativity and immersion. By understanding and implementing these technologies, developers can craft experiences that captivate and inspire players, setting new standards in the mobile gaming industry.